In the preface, I found the following quote, with which I wholeheartedly agree:

Wednesday, July 8, 2015

Whether you like it or not, no one should ever claim to be a data analyst until he or she has done string manipulation.

Saturday, June 20, 2015

Little things that make life easier #9: Using data.entry in R

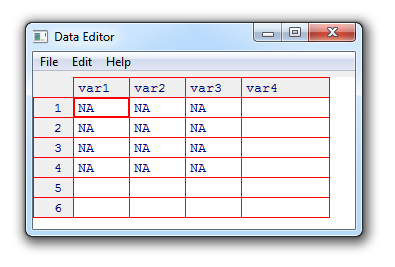

With data.entry(), it's easy to visually fill (small) matrices in R.

Let's see it in action. First, I create a 4x3 matrix:

The matrix is created with all of its cells set to NA. Now, in order to assign values to these cells, I use data.entry():

This opens a small window where I can enter the data.

This is how the cells looked before I edited them:

And here's how they looked after I edited them, just before I chose File > Close:

Back in the shell, the matrix has indeed changed its values:

Pretty cool, imho.

Wednesday, November 12, 2014

The power of k-nearest-neighbor searches

k-NN can be used to classify records of data once the algorithm has been fed with some examples of already classified records.

In order to demonstrate that, I have written a Perl script. The script creates two CSV files (known.csv and unknown.csv) and a third file, correct.txt. The k-NN algorithm uses the records in known.csv as its examples of a classification. Then, it tries to guess a classification for each record in unknown.csv.

For comparison purposes, correct.txt contains the correct classification for each record in unknown.csv.
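The classifier itself isn't shown here, so the following is only a minimal sketch of plain k-NN in Python, not the original Perl code. It assumes Euclidean distance and a simple majority vote among the k closest known records; the function name `knn_classify` and the default `k=3` are my own choices.

```python
import math
from collections import Counter

def knn_classify(known, record, k=3):
    """Guess the classification of `record` from its k nearest known records.

    known  -- list of (classification, features) pairs, features being floats
    record -- list of floats with the same length as each features list
    """
    # Sort the known records by Euclidean distance to the record to classify
    by_distance = sorted(known, key=lambda item: math.dist(item[1], record))
    # Count the classifications of the k closest neighbors
    votes = Counter(label for label, _ in by_distance[:k])
    # most_common keeps first-encountered order on ties, so a tie goes
    # to the label whose member is closest to the record
    return votes.most_common(1)[0][0]

known = [(1, [0.1, 0.2]), (1, [0.15, 0.25]), (2, [0.8, 0.9]), (2, [0.85, 0.8])]
print(knn_classify(known, [0.12, 0.22], k=3))  # → 1
```

Since k-NN has no real training phase, "using known.csv" simply means keeping all known records around and comparing every unknown record against them.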

Structure of known.csv

known.csv is a CSV file in which each record consists of 11 numbers. The first number is the classification for the record. It is an integer between 1 and 4 inclusive. The remaining 10 numbers are floats between 0 and 1.
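A record of known.csv could be read as sketched below. This assumes the file is comma-separated with no header line; the helper names `parse_known` and `read_known` are hypothetical, and the checks only enforce what the structure above describes (11 numbers, classification between 1 and 4).

```python
import csv

def parse_known(lines):
    """Turn CSV lines of known.csv into (classification, features) pairs."""
    records = []
    for row in csv.reader(lines):
        if not row:
            continue  # tolerate blank lines
        if len(row) != 11:
            raise ValueError(f"expected 11 numbers, got {len(row)}")
        classification = int(row[0])           # integer between 1 and 4
        if not 1 <= classification <= 4:
            raise ValueError(f"classification out of range: {classification}")
        features = [float(x) for x in row[1:]]  # the ten floats in [0, 1]
        records.append((classification, features))
    return records

def read_known(path):
    """Read known.csv from disk (assumed: comma-separated, no header line)."""
    with open(path, newline="") as f:
        return parse_known(f)
```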

Structure of unknown.csv

In unknown.csv, each record consists of 10 floats between 0 and 1. They correspond to the 10 floats that follow the classification in known.csv. The classification for the records in unknown.csv is missing from the file; it is the task of the k-NN algorithm to determine this classification. However, for each record in unknown.csv, the correct classification is found in correct.txt.
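Comparing the guesses against correct.txt might look like the sketch below. The format of correct.txt is not described, so I assume one integer classification per line, in the same order as the records in unknown.csv; `parse_unknown`, `parse_correct` and `accuracy` are hypothetical helper names.

```python
import csv

def parse_unknown(lines):
    """Turn CSV lines of unknown.csv into lists of ten floats each."""
    records = []
    for row in csv.reader(lines):
        if not row:
            continue  # tolerate blank lines
        if len(row) != 10:
            raise ValueError(f"expected 10 floats, got {len(row)}")
        records.append([float(x) for x in row])
    return records

def parse_correct(lines):
    """Assumed format of correct.txt: one integer classification per line."""
    return [int(line) for line in lines if line.strip()]

def accuracy(guesses, correct):
    """Fraction of records whose guessed classification matches correct.txt."""
    if not correct or len(guesses) != len(correct):
        raise ValueError("need exactly one correct classification per guess")
    hits = sum(g == c for g, c in zip(guesses, correct))
    return hits / len(correct)

print(accuracy([1, 2, 3, 4], [1, 2, 4, 4]))  # → 0.75
```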

Values for the floats

A record's classification determines the value ranges for the floats in the record, according to the following graphic:

The four classifications are represented by the four colors red, green, blue and orange. The Perl script generates eight such floats per record. The range for the first float of the red classification is [0.15, 0.45], the range for the first float of the green classification is [0.35, 0.65], etc. Similarly, the range for the second float of the red classification is [0.55, 0.85], and so on.

To make things a bit more complicated, two random values in the range [0, 1] are added to the eight values, resulting in 10 values. These two values are either both at the beginning, both at the end, or one at the beginning and the other at the end.
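The generation of one record can be sketched as follows. Only three of the ranges are given in the text (the rest are in the graphic), so the function takes the eight ranges of a classification as a parameter. Drawing uniformly within each range and picking each of the three placements with equal probability are my assumptions, not necessarily what the Perl script does; `make_record` is a hypothetical name.

```python
import random

def make_record(ranges, rng=random):
    """Build one 10-value record from eight (low, high) ranges plus two noise values.

    ranges -- the eight (low, high) value ranges of one classification
    rng    -- the random module or a random.Random instance
    """
    if len(ranges) != 8:
        raise ValueError("expected eight ranges")
    # The eight meaningful floats, each drawn from its class-specific range
    values = [rng.uniform(low, high) for low, high in ranges]
    # Two extra random values in [0, 1] that carry no class information
    noise = [rng.random(), rng.random()]
    placement = rng.choice(["begin", "end", "split"])
    if placement == "begin":
        return noise + values
    if placement == "end":
        return values + noise
    return noise[:1] + values + noise[1:]  # one at each end
```

Because the noise values shift the meaningful floats by zero, one or two positions, the same position in two records of the same classification does not always hold a comparable value, which is what makes the task harder for k-NN.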